Tuesday, November 21, 2023

Of Planning Tools and Synaptic Connections

Saturday, October 28, 2023

To Go or Not to Go --> (Urban Planning and the Distance Decay Function)

The fine art of problem articulation

The important thing about mathematical urban models is not the mathematics itself but its application to simulate urban phenomena that we are trying to understand. Therefore, even a failed attempt at creating a model may help an urban planner understand and articulate a phenomenon with greater clarity. Trying to explain an urban phenomenon in the form of an equation compels us to cut through the fog of vagueness and confusion in our minds and seek clarity.

Try to imagine what we expressed by the equation --> A=f(B, C, 1/D) in the previous blog and then try to explain it in words instead of the equation.

We would have to say something like - "When we consider shopping or any such pattern in a city...it depends on how many people are shopping....where they are shopping...it depends also on which shopping areas are large or attractive...also we must consider which are far away or close by...it's a pretty complex process....but also very basic etc etc"

While the equation used just 5 letters and 1 digit, the verbal explanation used about 250 letters (and counting).

This is not to say that the equation is better than the description, but that one should attempt to formulate an equation from the description - if only to check if that is even possible. It is an iterative process where a description helps us to form an equation and the equation in turn helps us to give a clearer description and so on.

Distance decay and the Gravity function

Distance is a crucial topic in urban planning. As we have seen in the previous blog, "distance", in this case, is not just a physical distance, but the measure of difficulty (or ease) involved in reaching where we wish to reach -- it could be kilometers of roads; delays due to traffic jams; the high cost of petrol; the physical and emotional stress of spending hours in travel etc.

The funny thing with transportation is that it is the only land-use that does not exist for itself, but for the primary purpose of facilitating interaction among other land-uses. We are generally not on a road because we want to "go" to the road. We are on it because it links where we are (our point of origin) to where we wish to go (our destination).

The other funny thing is that distance...decays.

What this essentially means is that as the distance between us and a particular destination increases, our desire to go to that destination decreases (or decays). This is easy to imagine. Let's say that there are two cafes which are equally attractive to you. Would you rather go to the one that is 500 meters away from you or to the one which is 12 kilometers away?

This is what we expressed in our equation as 1/D, i.e. as D increases, A (the number of trips) decreases. But by how much?

Way back in the 1930s, the American economist William J. Reilly found, from his empirical studies of the flow of retail trade, that the volume of trade between cities increased in direct proportion to the population of the cities and in inverse proportion to the distance between them. Moreover, trade did not merely decrease with distance; it decreased in proportion to the square of the distance between the cities.

Based on these observations, Reilly formulated what came to be known as the law of retail gravitation. Mathematically it is expressed as -

$$R_i = \frac{P_i}{d_{ki}^2}$$

where $R_i$ is the attraction of city $i$ felt by city $k$, $P_i$ is the population of city $i$, and $d_{ki}$ is the distance between city $i$ and city $k$. It bears an uncanny similarity to Newton's law of gravitation, in which the attraction between two bodies also decreases in inverse proportion to the square of the distance between them.

Reilly tested his model extensively by studying the "breaking point" between pairs of cities in the United States, i.e. the point between two cities at which their pulls on shoppers are equal.
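The breaking point follows directly from the inverse-square form above. For two cities a and b with populations P_a and P_b, a distance D apart, the point where their pulls are equal lies at a distance x from city a such that P_a / x^2 = P_b / (D - x)^2, which gives x = D / (1 + sqrt(P_b / P_a)). (This closed form is usually associated with P.D. Converse's later reformulation of Reilly's law.) The Python sketch below is a minimal illustration of that algebra; the function names, populations and distance are hypothetical.

```python
import math


def attraction(population: float, distance: float, exponent: float = 2.0) -> float:
    """Reilly-style pull of a city: population divided by distance raised to a power."""
    if distance <= 0:
        raise ValueError("distance must be positive")
    return population / distance ** exponent


def breaking_point(pop_a: float, pop_b: float, dist_ab: float) -> float:
    """Distance from city a at which the inverse-square pulls of a and b are equal.

    Solves pop_a / x**2 == pop_b / (dist_ab - x)**2 for 0 < x < dist_ab.
    """
    return dist_ab / (1 + math.sqrt(pop_b / pop_a))


# Hypothetical example: a city of 400,000 and a town of 100,000, 30 km apart.
x = breaking_point(400_000, 100_000, 30.0)
print(f"Breaking point: {x:.1f} km from the larger city")
print(attraction(400_000, x), attraction(100_000, 30.0 - x))
```

With these made-up numbers the larger city's pull reaches two-thirds of the way (20 km) towards the smaller town, and at that point both pulls come out equal.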

If we accept Reilly's findings for now, then we have already developed our original equation further and we now have this -

$$A_{ij} = f\left(B_i, C_j, \frac{1}{D_{ij}^2}\right)$$
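To turn this into numbers we have to pick a form for f. One common choice, assumed here since the text does not fix it yet, is the multiplicative gravity form A_ij = k * B_i * C_j / D_ij^2, where k is a scaling constant that would have to be calibrated against observed trips. The sketch below evaluates it for every pair of zones; the zone names, populations, shop counts, distances and k = 1 are all hypothetical.

```python
# Hypothetical data: residential zones (population B_i) and commercial zones (shops C_j).
population = {"R1": 20_000, "R2": 5_000}
shops = {"C1": 200, "C2": 50}
# Hypothetical distances D_ij in km between residential zone i and commercial zone j.
distance = {
    ("R1", "C1"): 2.0, ("R1", "C2"): 1.0,
    ("R2", "C1"): 4.0, ("R2", "C2"): 1.0,
}


def gravity_trips(pop, attr, dist, k=1.0, beta=2.0):
    """Assumed form: A_ij = k * B_i * C_j / D_ij**beta for every (i, j) pair."""
    return {
        (i, j): k * pop[i] * attr[j] / dist[(i, j)] ** beta
        for i in pop
        for j in attr
    }


trips = gravity_trips(population, shops, distance)
for (i, j), a in sorted(trips.items()):
    print(f"{i} -> {j}: {a:,.0f}")
```

With k = 1 the absolute numbers mean nothing; what the sketch shows is the relative pattern. Zone R1 sends equal numbers of trips to C1 and C2, because C1's fourfold advantage in shops is exactly cancelled by its being twice as far away (2 squared is 4).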

Nothing Super-Natural about it

It is clear from the way we constructed our equation, that there is nothing super-natural or god-given or mysterious about any of it.

We are just trying to analyse and describe how a certain kind of spatial interaction takes place.

This implies that there is nothing holy about the fact that the distance is raised to the power 2. Depending on the local context, it may be something else. In fact, empirical work over the years has shown that it tends to vary between 1.5 and 3 depending on a range of contextual factors.

However, many planning textbooks in India (including the venerable book on transportation planning by L.R. Kadiyali, with which every planning student is familiar) continue to use distance to the power 2 without explaining that, while it was derived from extensive empirical research, that research was conducted in the 1930s on large cities in the United States separated by an average distance of about 100 miles.

(The fact that the equation still holds was precisely due to Reilly's empirical rigour - something that our scholars and researchers tend to shy away from most of the time.)

In practice, you are free to play around with the power of D in order to check which value makes the equation simulate an observed reality best. Consider the following graph, which shows how the distance decay curve varies when D in the equation is raised to the power 1, 2 or 3 -

This graph shows how quickly the likelihood of traveling a certain distance will decline if we increase the power of D in the model.

As the power increases from 1 (the green line) to 3 (the red line), the tendency to travel farther declines. The red line corresponds to a reality where the tendency to travel decreases precipitously as distance increases from 0 to 3 kilometers and becomes almost nil beyond 5 kilometers.
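The numbers behind curves like these are easy to generate. The sketch below tabulates the relative tendency to travel, 1/D^n, for n = 1, 2 and 3 at a few illustrative distances. A pure power function blows up as D approaches 0, so the table starts at 1 km, where all three curves equal 1.0 and can be compared directly.

```python
DISTANCES_KM = [1, 2, 3, 5, 10]
POWERS = [1, 2, 3]


def decay(d_km: float, n: int) -> float:
    """Relative tendency to travel a distance d: 1 / d**n, equal to 1.0 at d = 1 km."""
    return 1.0 / d_km ** n


for d in DISTANCES_KM:
    row = ", ".join(f"n={n}: {decay(d, n):.4f}" for n in POWERS)
    print(f"D = {d:>2} km -> {row}")
```

At 5 km the n = 3 curve has fallen to 0.008 of its 1 km value (under 1 percent), while the n = 1 curve is still at 0.2, which matches the "almost nil beyond 5 kilometers" behaviour described for the red line.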

Understanding the rate at which the tendency to travel declines based on increase in the distance can help us understand how appropriately or inappropriately important facilities are located with respect to each other in a city. It can also help us to concretely evaluate planning decisions aimed at locating facilities in a certain way.
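Both uses depend on knowing which power of D suits the city in question. One simple way to check is to try a range of powers and keep the one whose predictions best match observed trips. The sketch below assumes a multiplicative form, A_ij = k * B_i * C_j / D_ij^beta (the text itself leaves f open), fits the scaling constant k by least squares for each candidate beta, and keeps the beta with the smallest squared error. The "observed" trips are synthetic, generated with beta = 2.5, so the search should recover that value; real work would use survey counts and a more careful estimation method.

```python
# Hypothetical zone pairs: (population B, shops C, distance D in km).
pairs = [(20_000, 200, 1.0), (20_000, 50, 3.0), (5_000, 200, 2.0),
         (5_000, 50, 5.0), (12_000, 120, 4.0), (8_000, 80, 1.5)]

TRUE_BETA, TRUE_K = 2.5, 0.001
# Synthetic "observed" trips generated from a known exponent, so the fit can be checked.
observed = [TRUE_K * b * c / d ** TRUE_BETA for b, c, d in pairs]


def fit_k(beta):
    """Least-squares k for a given beta, plus the resulting sum of squared errors."""
    g = [b * c / d ** beta for b, c, d in pairs]
    k = sum(o * gi for o, gi in zip(observed, g)) / sum(gi * gi for gi in g)
    sse = sum((o - k * gi) ** 2 for o, gi in zip(observed, g))
    return k, sse


# Grid search over candidate exponents 1.0, 1.1, ..., 3.0.
candidates = [round(1.0 + 0.1 * n, 1) for n in range(21)]
best_beta = min(candidates, key=lambda beta: fit_k(beta)[1])
best_k, _ = fit_k(best_beta)
print(f"best beta = {best_beta}, k = {best_k:.4f}")
```

A grid search is crude but transparent: it makes the "play around with the power of D" idea explicit, and the same loop works with any other form of f.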

In the next blog we will play around with this equation a bit more by applying it to situations familiar to us.

Monday, October 23, 2023

Overcoming the fear of mathematics in planning education and practice

A vital contradiction in our education system

Jean-François Geneste, the former chief scientist of Airbus, said in a brilliant talk delivered at Skoltech that, when it comes to large and complex systems,

What he said has great implications for our own field of urban and regional planning too. It is important to measure and to measure correctly, before planning decisions affecting millions of people, thousands of businesses and hundreds of hectares of land-uses of different kinds can be taken.

Yet, precisely when there is a growing fascination with data and digital technologies, there seems to be a relatively low understanding of the role of mathematics in planning. A substantial part of the problem lies in the fear of the subject itself and the inability to apply it effectively in real situations.

We are all aware that, due to the peculiar limitations of the Indian education system, there is a rigid and unnatural separation between the sciences and the arts.

This leads to a situation where people trained in engineering techniques are often completely devoid of an awareness of social issues and of creativity; and the people trained in humanities are often clueless when it comes to physics, mathematics etc.

Truth be told, this challenge exists in urban planning education outside India too, though perhaps not as severely. As Brian Field and Bryan MacGregor noted in the preface to their book on forecasting techniques,

The important word here is numerate - which means having a knowledge of mathematics and the ability to work with numbers. It is the mathematical counterpart of the word literate, which is the ability to read and write.

The complex is essentially simple

The complex is essentially simple, because it is also a function of our learning, experience and skill. To someone who has never stepped into a kitchen, even making a cup of tea may seem like a forbiddingly complex task. However, to most people it is just a regular task -- a simple task.

The funny thing is that mathematics seems more difficult when it is taught but easier when it is applied!

When it is taught - especially in our schools - its difficulty is cranked up to meet the needs of the engineering entrance exams, whose purpose is to eliminate large numbers of students through a process of cut-throat competition. It is easy to see that this "goal" has nothing to do with solving practical problems of life and society.

Even when students master that gigantic syllabus and get extremely high grades, they may not have internalised the logic behind the topics and may fail to apply them creatively in real life situations.

However, when one begins to study science because one wants to understand and solve real-life problems, the relevance and applicability of the topics become automatically evident, and the human mind understands and internalises them faster.

Let's try to understand this using an example of a model, where we go from the simple to the complex and then realise that it's essentially simple.

Distance decay and equations that make you run away

Urban models are, at their core, mathematical equations that describe the essential features of an urban system and enable us to simulate and predict its behaviour.

Consider the following equation from Field and MacGregor's book (I will refer to this book and David Foot's book on operational urban models many times in these blogs, as they are just brilliant) -

A = f(B, C, D)

This equation basically means that the variable A is a function of (that is, in some way depends on) three other variables - B, C and D. Therefore, the value of A will change if there are changes in the values of B, C and D.

But what do A, B, C and D stand for?

Let's assume that we want to understand how many shopping trips are made from various residential areas in the city to commercial areas. We can describe the components of our model in this way --

A = Number of trips made for shopping purposes (what we wish to find out)

B = Population of the residential area

C = Number of shops in the commercial area

D = Distance between the residential area and commercial area.

But what do we do if a city has multiple residential areas and multiple commercial areas, and sometimes the residential areas are also commercial destinations and vice versa? We would like to express our equation in a way that shows interaction between any number of residential zones and any number of commercial zones.

We do it by introducing two indices, i and j (where i stands for any residential zone and j stands for any commercial zone), and rewriting our equation thus -

$$A_{ij} = f(B_i, C_j, D_{ij})$$

Where -

Aij - number of shopping trips made from zone i to zone j

Bi - residential population of zone i

Cj - number of shops in zone j

Dij – distance between zone i and zone j
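The indices are what let one equation stand for many. With, say, three residential zones and two commercial zones, Aij = f(Bi, Cj, Dij) is really six equations, one per (i, j) pair. The sketch below simply enumerates those pairs and applies whatever function f is plugged in. The zone data are hypothetical, and the placeholder f is deliberately arbitrary, since at this stage the form of f is still open.

```python
from itertools import product

# Hypothetical zones: B_i (residential population) and C_j (number of shops).
B = {"i1": 1_000, "i2": 3_000, "i3": 2_000}
C = {"j1": 40, "j2": 10}
# Hypothetical D_ij: distance in km for every residential-commercial pair.
D = {("i1", "j1"): 2.0, ("i1", "j2"): 1.0,
     ("i2", "j1"): 5.0, ("i2", "j2"): 2.0,
     ("i3", "j1"): 1.0, ("i3", "j2"): 4.0}


def model(f):
    """Evaluate A_ij = f(B_i, C_j, D_ij) for every (i, j) pair."""
    return {(i, j): f(B[i], C[j], D[(i, j)]) for i, j in product(B, C)}


# Placeholder f, chosen only so that the sketch runs.
A = model(lambda b, c, d: b * c / d)
print(len(A), "equations, one per (i, j) pair")
print(A[("i2", "j1")])
```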

Constructing mathematical models is a creative process

Friday, October 13, 2023

Automating Planning Tasks 2 - (Programming with GRASS + Linux)

In the first part of this blog, we discussed how the various steps of a particular planning problem (in this case the slum-proofing problem of Jaga Mission) can be articulated, algorithmised and then automated. In this second part, we shall see how the specific commands in the computer program work.

The technical steps necessary for achieving the goal (given the capabilities and constraints of government organisations executing the mission) were identified and are listed below -

(a) Identify the location of existing slums

(b) identify vacant government land parcels near the existing slums

(c) check them for suitability

(d) generate map outputs for further visual analysis and verification.

It is clear that the solution involves spatial analysis tasks to be performed using Geographic Information System (GIS) software.

While QGIS is the more familiar and user-friendly option, my preferred software for tasks like this is GRASS, which stands for Geographic Resources Analysis Support System.

GRASS is an extremely powerful and versatile open-source software package, originally developed by the US Army Construction Engineering Research Laboratory (CERL) and now maintained as a project of OSGeo, the Open Source Geospatial Foundation.

A very useful feature of GRASS is that when you undertake any operation on it (e.g. clipping features in one map layer using features in another map layer), the command line version of the operation is also shown.

This is because GRASS is not just point-and-click software (software that relies on a Graphical User Interface, or GUI, to perform operations by clicking with a mouse); it also offers a command line.

Most computer users are unfamiliar with the Command Line Interface (CLI), even intimidated by it (I certainly used to be, not so long ago). They therefore continue to work in the user-friendly environment of the GUI, doing all their tasks with the mouse. However, by doing that they fail to harness even a fraction of the power of these wonderful machines - there is a reason why users who work comfortably with the CLI are called Power-Users. I have written about the advantages of the CLI in earlier blogs.

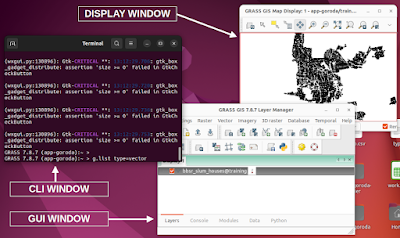

When GRASS is launched, it opens three windows simultaneously: a graphical console (for performing operations using the mouse), a display window (for viewing the maps and the results of operations) and a command line window (for performing tasks by typing in commands).

An interesting thing happens when you perform any GIS operation in GRASS. Let's say you wish to execute the module called v.extract, which creates a new vector map by selecting features from an existing map (quite like exporting selected features to a new map layer in QGIS). The series of steps is similar to that in QGIS: you specify the input map name, the output map name (of the new map), any queries that specify which features are to be selected, etc.

You will notice that while you are specifying these details by clicking with the mouse and entering map names, the following line is being typed out at the bottom of the module window -

This line is basically the command line version of the v.extract operation. This is how it reads --

v.extract input=bbsr_slum_houses@training where="ward_id = 16" output=ward_16_slum_houses --overwrite

The command begins with the name of the module, then specifies the name of the input map (the part after @ names the mapset it belongs to, here training), the SQL query (in this operation the query selects all slum houses that are located in ward 16) and the name of the output map. The optional --overwrite flag can be added if you run the operation multiple times and want the previous outputs to be overwritten automatically (useful during scripting/programming).
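The structure described above (module name, then key=value options, then optional flags) is regular enough to take apart mechanically. The Python sketch below, using only the standard library's shlex module, splits the v.extract command into those three parts. It is an illustration of the command's anatomy, not a GRASS tool; the function name is hypothetical.

```python
import shlex


def parse_grass_command(line: str):
    """Split a GRASS command line into (module, options, flags)."""
    tokens = shlex.split(line)  # respects the quotes around the SQL clause
    module, options, flags = tokens[0], {}, []
    for tok in tokens[1:]:
        if tok.startswith("-"):
            flags.append(tok)  # e.g. --overwrite
        else:
            key, _, value = tok.partition("=")
            options[key] = value
    return module, options, flags


cmd = ('v.extract input=bbsr_slum_houses@training where="ward_id = 16" '
       'output=ward_16_slum_houses --overwrite')
module, options, flags = parse_grass_command(cmd)
print(module)   # v.extract
print(options)
print(flags)    # ['--overwrite']
```

Note how the quoted where clause survives as a single option value ("ward_id = 16") even though it contains spaces; that is exactly why the query is quoted in the original command.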

When you click the "copy" button in the module window, this command line gets copied to the clipboard. You can then paste it into a text file to make it a part of your program.

A good practice is to run all the operations manually once using the mouse, just so that you are clear about all the operations and their command line versions. With practice, you will not need to copy the commands. You will simply be able to type them out from memory or by referring to the manual page of the respective module.

Turning individual commands to a script

Commands are very powerful because instead of clicking with a mouse many times, you can just type a short line and get the job done. But the main power of the commands is that you can write a bunch of them down in a sequence in a text file and execute it as a program to automate all your computing tasks.

Consider the following 4 commands written down in a sequence -->

(1) v.buffer --overwrite input=centroids output=buffer type=point distance=$buffer

(2) v.clip --overwrite input=orsac_tenable clip=buffer output=vacant

(3) v.out.ogr --overwrite input=vacant output=$LAYER_OUTPUT/${ulb}_vacant_${buffer}_m_buffer.gpkg format=GPKG

(4) db.out.ogr --overwrite input=vacant output=$LAYER_OUTPUT/${ulb}_vacant_${buffer}_m_buffer.csv format=CSV

Of course, it looks a bit overwhelming! But there is nothing to worry about, because everything useful feels a bit overwhelming at the start. Here is what is going on -

In step 1, the v.buffer module draws a buffer of user-defined distance around the centroids of the slums and saves it as a vector map called buffer. In step 2, the v.clip module clips features from the land parcels layer using the buffer map. In step 3, the v.out.ogr module exports the clipped land parcels as a GeoPackage (gpkg) vector file and stores it in your chosen folder. In step 4, the db.out.ogr module exports the attribute table of the clipped map (containing details of the land parcels) as a comma separated values (csv) file and stores it in your chosen folder. The $buffer, $ulb and $LAYER_OUTPUT parts are shell variables holding the user-defined buffer distance, the code of the selected city and the output folder respectively.

When you run these commands as a script then all these tasks get performed automatically in this sequence.

It is clear from the above that you can tie together any number of commands in an appropriate sequence to handle analytical tasks of varying volume and complexity.
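As a rough illustration of tying commands together, the Python sketch below builds the four commands above for a list of (city code, buffer distance) jobs and, only when asked, runs them in order, stopping at the first failure. It assumes the script runs where the GRASS modules are available (for example, inside a GRASS session). The input map names centroids and orsac_tenable are taken from the commands above; how the original script points them at each city's data is not shown, so the example just varies the buffer distance for one hypothetical city code. The function names, the city code and the output folder are hypothetical, and --overwrite is set on all four commands so that a rerun does not stop on maps left over from the previous run.

```python
import subprocess
from pathlib import Path


def build_commands(ulb: str, buffer_m: int, out_dir: str):
    """Return the four GRASS commands (as argument lists) for one city and buffer."""
    stem = Path(out_dir) / f"{ulb}_vacant_{buffer_m}_m_buffer"
    return [
        ["v.buffer", "--overwrite", "input=centroids", "output=buffer",
         "type=point", f"distance={buffer_m}"],
        ["v.clip", "--overwrite", "input=orsac_tenable", "clip=buffer",
         "output=vacant"],
        ["v.out.ogr", "--overwrite", "input=vacant",
         f"output={stem}.gpkg", "format=GPKG"],
        ["db.out.ogr", "--overwrite", "input=vacant",
         f"output={stem}.csv", "format=CSV"],
    ]


def run_all(jobs, out_dir, dry_run=True):
    """Run (or, by default, just print) the command sequence for every job."""
    executed = []
    for ulb, buffer_m in jobs:
        for cmd in build_commands(ulb, buffer_m, out_dir):
            executed.append(cmd)
            if dry_run:
                print(" ".join(cmd))
            else:
                # check=True raises on the first failing module and stops the batch
                subprocess.run(cmd, check=True)
    return executed


# Hypothetical city code and buffer distances, printed rather than executed.
cmds = run_all([("ulb01", 500), ("ulb01", 1000)], "outputs")
```

Passing argument lists (rather than one long string) to subprocess.run avoids any shell quoting issues, which matters once SQL clauses like the one in v.extract enter the picture.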

GRASS + BASH

Bash - an acronym for Bourne Again Shell - is basically a "shell": a program in Unix-like operating systems such as Linux that is used to communicate with the computer, i.e. it takes keyboard commands and passes them on to the operating system for execution.

The unique thing about Bash is that it is not just a powerful method for passing commands to the operating system but also an effective programming language.

The advantage of combining GRASS with Bash is that you can take the GIS commands of GRASS and use them as they are in a Bash script, without any change in syntax, by handing them to the GRASS launcher with its --exec flag (i.e. grass, followed by the path to a mapset, then --exec and the GRASS command or the script to run).

By doing that you can not only execute the GIS tasks but combine those tasks with all the other programs and the full power of your Linux computer.

For example, in the same script, you can select the GIS files of a few specific cities, undertake all the operations that you need to undertake, save the results in a separate folder, extract the attribute data as csv files, undertake data analysis tasks on them, put them in some other folder, turn both the spreadsheets and the output maps into pdf files etc etc.

I guess this is already somewhat of an overload...and I feel tired of typing too ! I will show the combination with Bash in the next blog.

The request and the reward

Using the command line and scripting with GRASS and Bash are extremely powerful, flexible and fun processes, but they do request a readiness to learn and an openness to be creative.

The rewards...well they are immeasurable.

Thursday, September 28, 2023

Data and its Non-Use

Tackling the dynamic with the static

One of the primary challenges facing the management and governance of our cities is that they are extremely complex and dynamic systems. At any point in time, the decision-maker has to juggle multiple unknown and possibly unknowable variables. Dealing with our cities must therefore be seen as the fascinating challenge of having to deal with uncertainty.

But how does one achieve it? How does one master the uncertain and the unknown? There are many ways of doing it, which, unfortunately, remain unused and abandoned by our city planners and decision-makers. Our planners, obsessed with the preparation of voluminous master plans, often ignore something very fundamental - you cannot tackle something extremely dynamic with something extremely static.

As the renowned architect-planner Otto Koenigsberger observed six decades ago while preparing the master plan of Karachi, by the time such master plans are ready they are already out of date. In such a situation, not only do the decisions of the planners hit the ground late, but the feedback is hopelessly delayed too.

The importance of feedback...and of cognitive dissonance

Anyone dealing with complex systems would be familiar with the crucial value of feedback. A system that continuously fails to correct itself in time is a doomed system.

Yet, are things like handling vast amounts of data, taking decisions in real time and continuous course-correction based on feedback through a network of sensors really such insurmountable problems in the present times?

On the contrary, a characteristic feature of the present times is not only a complete technological mastery over these challenges but also the increasing affordability and accessibility of such technology. Does Google Maps wait for a monthly data analysis report to figure out how many people had difficulty reaching a selected destination, and then modify its algorithm accordingly? It shows an alternate route instantly when it senses that the user has taken a wrong turn.

We take this feature of Google Maps as much for granted as we take a 20-year perspective plan of a city for granted - such is the collective cognitive dissonance of our times.

Tackling uncertainty by shortening the data collection cycle

Let us consider a problem more serious than reaching the shopping mall successfully using Google Maps navigation. We are all aware of the havoc that flash floods cause in our cities. They are hard to anticipate because they can occur within minutes due to extremely high rainfall intensities. As a consequence of climate change, we can only expect such events to become more erratic and intense over time. While the rain falls over a short duration, the available rain gauges still report rainfall aggregated over a 24-hour cycle. Therefore, despite collecting vast amounts of rainfall data, we may still not be able to use it to predict the occurrence of flash floods.

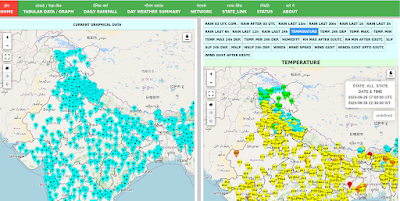

However, in the last few years, the Indian Space Research Organisation (ISRO) and the India Meteorological Department (IMD) have installed Automatic Weather Stations (AWS) at various locations across the country, which record rainfall data at intervals of less than an hour. With data from the AWS, we not only get better datasets for analysis but can also respond in near real-time when an event happens.

In this case, we tackled the uncertainty of the rainfall event by reducing the data-collection cycle from 24 hours to less than an hour. We didn't eliminate uncertainty but we definitely limited it.
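A small numerical illustration of why the length of the collection cycle matters. The hourly figures below are invented for the example: 60 mm of rain falls within a single hour and the rest of the day is dry. Aggregated to a 24-hour cycle, the record only shows 60 mm spread, on average, at 2.5 mm per hour, while the hourly series reveals the burst.

```python
# Hypothetical hourly rainfall (mm) for one day: a single intense burst.
hourly_mm = [0.0] * 24
hourly_mm[16] = 60.0  # a 60 mm downpour between 16:00 and 17:00

daily_total = sum(hourly_mm)
daily_mean_intensity = daily_total / 24  # what a 24-hour cycle implies, mm/h
peak_intensity = max(hourly_mm)          # what an hourly record reveals, mm/h
peak_hour = hourly_mm.index(peak_intensity)

print(f"24-hour total: {daily_total} mm")
print(f"Mean intensity implied by the daily figure: {daily_mean_intensity} mm/h")
print(f"Peak hourly intensity: {peak_intensity} mm/h at {peak_hour}:00")
```

The daily total is the same either way; what the 24-hour cycle loses is when the rain fell and how intensely, which is precisely the information that flash-flood warning depends on.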

While tackling complex and uncertain situations, our main allies are not needlessly vast quantities of data but the ability to clearly articulate the problems faced and then to algorithmise them - the more articulate the problem statement, the more effective the algorithm.

Algorithmising Jaga Mission tasks

Let's take another example of Jaga Mission - Government of Odisha's flagship slum-empowerment program. Jaga Mission has arguably created the most comprehensive geo-spatial database of slums in the world. Its database consists of ultra-high resolution (2 cm) drone imagery of each and every slum in the state.

But the vastness of the data by itself achieves nothing - except increasing the headache of custodians of the data who do not possess necessary data handling skills.

It is only when the variables contained in the data are identified and linked with each other, that one can feel its true power.

The database essentially consists of four main components for each of the 2919 slums of the state -

(a) The drone imagery of the slum

(b) The slum boundary map layer

(c) The slum houses map layer (containing household information)

(d) The cadastral map layer (showing land-parcels and ownership)

These four components can be combined in myriad ways to tackle a whole range of complex problems encountered during implementation.
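As a toy illustration of one such combination (the forest-land check described below), the sketch filters cadastral parcels by an ownership attribute and then finds which slum houses fall inside them, using a standard ray-casting point-in-polygon test. In practice this would be done on the real layers in a GIS; here the parcels are tiny hand-made polygons, each house is reduced to a single point, and the attribute name "owner" and all ids and coordinates are hypothetical.

```python
def point_in_polygon(x, y, polygon):
    """Ray casting: count crossings of a horizontal ray from (x, y) to the right."""
    inside = False
    n = len(polygon)
    for k in range(n):
        x1, y1 = polygon[k]
        x2, y2 = polygon[(k + 1) % n]
        if (y1 > y) != (y2 > y):  # this edge straddles the ray's y-level
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


# Hypothetical cadastral layer (d): parcel id -> owner and boundary (metres).
parcels = {
    "P1": {"owner": "forest", "shape": [(0, 0), (50, 0), (50, 40), (0, 40)]},
    "P2": {"owner": "revenue", "shape": [(50, 0), (100, 0), (100, 40), (50, 40)]},
}
# Hypothetical slum houses layer (c): house id -> representative point.
houses = {"H1": (10, 10), "H2": (45, 30), "H3": (60, 20), "H4": (95, 5)}

forest = {pid: p for pid, p in parcels.items() if p["owner"] == "forest"}
affected = sorted(
    hid for hid, (x, y) in houses.items()
    if any(point_in_polygon(x, y, p["shape"]) for p in forest.values())
)
print(affected)  # ['H1', 'H2']
```

Reducing houses to points keeps the sketch short; finding the area of overlap with the slum boundary would need a true polygon intersection, which is what the GIS overlay tools provide.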

Does a city wish to know whether some slums lie on land belonging to the forest department in order to avoid problems during upgrading ?

No problem! Just filter the forest parcels in the cadastral layer (d) and find out exactly which households are affected by creating an intersection with the slum houses layer (c). Similarly, total area lying on forest land can be found by creating an intersection with the slum boundary layer (b).

Monday, September 25, 2023

Automating Planning Tasks - Part 1 --> (100 MB PowerPoint file vs 3 KB text file)

What computers were not meant to do

"But in running our institutions we disregard our tools because we do not recognise what they really are. So, we use computers to process data, as if data had a right to be processed, and as if processed data were necessarily digestible and nutritious to the institution, and carry on with the incantations like so many latter-day alchemists."

- Stafford Beer, 'Designing Freedom'

The cyberneticist Stafford Beer wrote these lines in his typical humorous style way back in the 1970s.

Despite Beer's best efforts, in the years since the publication of his essays, and with the tremendous increase in the processing power, storage capacity and affordability of modern computers, this obsession with data has kept on growing until it has reached the ludicrous levels we see today, where the act of collecting vast amounts of data has become its own justification.

A particularly tragic situation, quite typical in the offices of the urban development sector (the field I am most familiar with), involves highly educated professionals spending tens of person-hours preparing graphics-heavy PowerPoint presentations. Nothing against PowerPoint at all! It is a great piece of software. The problem lies in the undue importance that professionals in the development sector feel obligated to attach to visual presentations, and in the time and effort they end up dedicating to the task.

Instead of making a clear presentation of the activities being undertaken by the organisation (the main purpose of software like PowerPoint), the making of the presentation itself becomes a big chunk of the activities performed by the organisation.

And these files are heavy! Tens of megabytes, just to clutter the whole thing with images, data visualisation charts, animations etc.

The same philosophy extends to the online dashboards and cluttered charts that urban planning graduate students in India increasingly make for their project presentations.

Whether by design or not, the only effect such presentations have is to visually overwhelm and confuse the viewer, and not bring clarity to the topic being discussed.

We have all seen those bloated PowerPoint files...no need to share examples of those eyesores here.

Now let's see instead the power of a simple text file containing a script, and with a size of only 3 kilobytes.

The 3 kb text file

The following screenshot is of a program I wrote for automating the technical steps of the slum-proofing vertical of Jaga Mission - the landmark slum land titling and upgrading initiative of the Government of Odisha.

I will explain the slum-proofing vertical in detail in another blog. In this one I will just outline the structure of the program.

The process involved certain very concrete technical steps - (a) Identify the location of existing slums (b) identify vacant government land parcels near the existing slums (c) check them for suitability (d) generate map outputs for further visual analysis and verification.

The program automates that planning process by performing the following steps -

1) selects a user-designated city from the list of total cities;

2) draws buffers of user-designated radius length around the centroid of each slum;

3) clips suitable land parcels (i.e. filters out categories such as waterbodies, ponds, tanks, forests etc) that fall within the buffer from the cadastral map layer containing vacant land parcels owned by the government;

4) calculates the total vacant land available and approximate number of households that could be accommodated;

5) outputs a report stating the total vacant land available and the total residential plots that could be created on that land, assuming a plot size of 30 sqm and 60 percent land coverage by residential plots;

6) outputs vector maps of the vacant land parcels for further visual scrutiny and human analysis;

7) outputs maps in pdf format for a quick look by team members unfamiliar with GIS and for printing out.
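Steps 4 and 5 boil down to simple arithmetic once the vacant area is known. Taking the assumptions stated in step 5 (30 sqm plots, 60 percent of the land in residential plots), a minimal sketch of the calculation is below. The parcel areas are hypothetical; rounding down to whole plots, and counting one household per plot, are assumptions of this sketch rather than something the text specifies.

```python
import math

PLOT_SIZE_SQM = 30        # plot size assumed in step 5
RESIDENTIAL_PERCENT = 60  # share of vacant land given to residential plots


def plot_capacity(parcel_areas_sqm):
    """Return the total vacant area and the number of whole plots it could hold."""
    total = sum(parcel_areas_sqm)
    residential_area = total * RESIDENTIAL_PERCENT / 100
    # Rounding down to whole plots (one household per plot) is an assumption.
    plots = math.floor(residential_area / PLOT_SIZE_SQM)
    return total, plots


# Hypothetical areas (sqm) of the vacant parcels found inside one slum's buffer.
total, plots = plot_capacity([4_200, 2_500, 3_300])
print(f"Vacant land: {total} sqm -> about {plots} residential plots")
```

In other words, every hectare (10,000 sqm) of suitable vacant land translates into roughly 200 plots under these assumptions.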

It took a few seconds for this process to be completed for a city that contained about 40 slums.

If the user would like to change the city or alter the buffer distance (for example, if suitable land is not available within the buffer of the designated radius length), it can be easily done by just typing the desired inputs in the prompt asking for the city code and the buffer radius.

Considering that the Mission involves 115 cities and 2919 slums, this program shortens the analytical process by orders of magnitude and frees up time for studying the outputs, holding discussions, refining the overall strategy and assessing the probability of effective implementation.

And most importantly, writing such programs is an extremely interesting, fun and creative process.

Have fun doing creative work and automate the rest...what could be more delightful than that??

The size of the text file that contains this program and undertakes all these tasks in a matter of seconds is 3 kilobytes.

Is it so hard to see which one is really our friend and ally??

To part 2...

Saturday, July 15, 2023

Smartness...without the Stutter - what India's Smart Cities Mission could be about

For example, one should not attempt to either shape or evaluate the Smart Cities Mission using metrics that one could use for AMRUT, PMAY or the Swachh Bharat Mission.

If one attempts to measure "intelligence" using metrics like the number of integrated command and control centres built, or the ridiculously high figure of lakhs or crores spent on the 5858 smart city projects, or that other wholly irrelevant variable - the number of place-making projects implemented - then one may totally lose one's way and end up with a complete hotch-potch of things where anything and everything could be described as a smart city.

Perhaps this is exactly what made the present minister, a seasoned former diplomat and an articulate speaker, appear rather at a loss for words while describing the achievements of the Smart Cities Mission at the National Urban Planning Conclave in July this year.

The fact that, while talking about the command and control centres, he could only mention their role as war rooms during covid says a lot about the lack of clarity regarding one of the most significant components of the Smart Cities Mission. Yet it is precisely the points he mentioned, and the manner in which he mentioned them, that reveal what is fundamentally problematic in the conceptualisation of the Smart Cities Mission.

Not a separate mission...but the brain linking all missions

To see the Smart Cities Mission as a distinct mission itself seems to be an erroneous approach. Instead of trying to create a green-field "smart city" or develop parts within the city as area based development zones or initiate a random traffic signaling project here or a place making project there, the smart cities mission should be about being the "brain" that links, coordinates and charts out the path for all the various urban missions and activities being undertaken in a particular city. It should be the "intelligence" that guides the actions of all the on-going and future missions.

Seen in this way, there would be no need to justify the achievements of the mission by quoting figures for numbers of smart parks developed or the expenditure incurred in the construction of a super-duper integrated command and control centre building.

Unfortunately, the work of being the brain is precisely what the Smart Cities Mission has not been able to do. To do that, one needs the freedom to adopt a way of thinking that focuses on how things are being implemented rather than merely on what is being implemented -- on effectiveness and functionality rather than on appearing cool and spewing buzzwords.

One has to somehow initiate a "capacity building" programme for the very politicians, government officials and private sector consultants who are engaged with the smart cities mission....for, I hate to say it, but they really don't seem to have a clue when it comes to the science of creating intelligent systems capable of tackling complexity and uncertainty.

The fact that the mission is often under vigorous criticism from a bunch of even more clueless social and political scientists, journalists and activists is another reason for its inability to correct its course. This crowd is even more difficult to handle because its members are not only comfortably ignorant of the fact that they are completely ignorant but also pretty smug in their confidence that their critique is spot on.

What Smart systems are like

In my early days of exploring the Smart Cities Mission I often came across the trivia that the concept of smart cities has its roots in products and systems developed by IBM.

Well, if that is so, then how do we explain the following lines written in 1965 ?

"Planning is not centrally concerned with the design of the artefacts, but with a continuing process that begins with the identification of social goals and the attempt to realise these through the guidance of change in the environment. At all times the system will be monitoring to show the effects of recent decisions and how these relate to the course being steered. This process may be compared to that encountered in the control mechanisms of living organisms, part of the subject matter of cybernetics."

- J.B. McLoughlin

The idea in this passage of a system that is continuously monitoring the effects of recent decisions is precisely the task that the integrated command and control centres should be performing. And as McLoughlin rightly points out, the roots of such a continuous and dynamic style of planning (which strongly resembles a Smart City approach) lie in the fields of cybernetics and operations research.

How can a system develop a kind of "intelligence" that lets it take decisions and optimise its course by continuously receiving feedback from a variety of sensors? The principle of automatic control is built into such an approach. At its most basic, it could be something as simple and effective as a water tank with a floating valve stopper that stops the flow of water into the tank when the water reaches a certain level and resumes it when the level falls below that mark.
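To make the feedback idea concrete, here is a minimal sketch of the float-valve tank as an on/off control loop. The cut-off level, inflow and water draws are hypothetical numbers chosen only for illustration; the point is the loop itself: sense the level, decide on the valve, act, and sense again in the next step.

```python
# A minimal sketch of the float-valve tank as a feedback (on/off) control loop.
# All numbers (cut-off level, inflow, draws) are hypothetical illustrations.

def simulate_tank(draws, cutoff=100.0, inflow=8.0, start=90.0):
    """Return the water level at the end of each time step.

    draws  -- water taken out of the tank in each step (e.g. household use)
    cutoff -- level at which the float closes the inlet valve
    inflow -- water admitted per step while the valve is open
    """
    level = start
    history = []
    for draw in draws:
        # Sensing + decision: the float "reads" the level and sets the valve.
        valve_open = level < cutoff
        if valve_open:
            # The float shuts the valve as soon as the cut-off is reached.
            level = min(level + inflow, cutoff)
        # Consumption lowers the level; it cannot go below empty.
        level = max(level - draw, 0.0)
        history.append(level)
    return history


if __name__ == "__main__":
    print(simulate_tank([0, 0, 5, 20, 0]))  # prints [98.0, 100.0, 95.0, 80.0, 88.0]
```

No human opens or closes the valve, yet the level never overshoots the cut-off: the "decision" is built into the mechanism.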

And at a large and advanced level it could be something like this -

This is a diagram of an interconnected power generating system. The components comprising the system and shown in the diagram were described by the author A.A. Voronov as follows -

"A few hydroelectric stations (A) and the thermal stations (B) tied into a ring network operating on a common constant load (C) under direct digital control from a control room (D) common for all the utilities."

- A.A. Voronov, "Specific Features Involved in the Development of Large Automatic Control Systems"

The component (D) highlighted by me in the line above and shown as "Supervising Computer" in the diagram IS an Integrated Command and Control Centre.

It is not about what kind of building it is located in, but the function that it performs that is important.

And what is that function ? Voronov explains it as follows -

"Depending on weather conditions the amount of water collected and stored in the station reservoirs can vary significantly. At a low water level the hydroelectric stations should cut their daily discharge from the reservoirs so as to conserve the accumulated water. To compensate the reduced capacity of the water stations the network has to increase the amount of fuel burnt in the thermal units, which implies increased requirements for railway transportation delivering this fuel. Conversely, with high water levels in the reservoirs, the requirements for railway transportation are reduced accordingly.

The algorithm computing these optimal powers is implemented on the supervising computer of the system. From the general incoming information visualised on the display the operator may adjust the algorithm to take into account the changing external conditions of the system such as fuel characteristics, flooding terms, and so on."

The network described above coordinates varying hydrological conditions, power generation and freight transportation through algorithms running on the integrated command and control centre.
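The chain Voronov describes (low reservoirs, less hydro discharge, more thermal output, more fuel, more railway wagons) can be sketched in a few lines. This is a simple rule-based illustration, not his optimisation algorithm; the proportional cut in hydro output, the fuel rate and the wagon capacity are all hypothetical assumptions. The `OperatorSettings` object stands in for the parameters he says the operator may adjust as external conditions change.

```python
# A rule-based sketch (NOT Voronov's optimisation algorithm) of how a
# supervising computer could link reservoir levels, hydro/thermal output and
# railway fuel deliveries. All parameter values are hypothetical.
import math
from dataclasses import dataclass


@dataclass
class OperatorSettings:
    """Parameters the operator can adjust as external conditions change."""
    low_water_threshold: float = 0.3   # reservoir fill below which hydro is cut
    fuel_tonnes_per_mwh: float = 0.5   # hypothetical fuel characteristic
    tonnes_per_wagon: float = 60.0     # hypothetical railway wagon capacity


def dispatch(load_mw, hydro_capacity_mw, reservoir_fill, settings, hours=24):
    """Split a constant load between hydro and thermal stations for one period.

    reservoir_fill is the fraction (0..1) of reservoir storage currently full.
    """
    # Low water: scale hydro discharge down in proportion to conserve storage.
    if reservoir_fill < settings.low_water_threshold:
        hydro_share = reservoir_fill / settings.low_water_threshold
    else:
        hydro_share = 1.0
    hydro_mw = min(hydro_capacity_mw * hydro_share, load_mw)
    # Thermal stations make up whatever the hydro stations cannot supply.
    thermal_mw = load_mw - hydro_mw
    # More thermal output means more fuel, hence more railway wagons.
    fuel_tonnes = thermal_mw * hours * settings.fuel_tonnes_per_mwh
    wagons = math.ceil(fuel_tonnes / settings.tonnes_per_wagon)
    return {"hydro_mw": hydro_mw, "thermal_mw": thermal_mw,
            "fuel_tonnes": fuel_tonnes, "wagons": wagons}


if __name__ == "__main__":
    settings = OperatorSettings()
    print(dispatch(1000, 600, reservoir_fill=0.15, settings=settings))  # dry spell
    print(dispatch(1000, 600, reservoir_fill=0.80, settings=settings))  # high water
```

With these made-up numbers the dry spell needs 140 wagons of fuel and the high-water period 80. Changing a field such as `fuel_tonnes_per_mwh` is the kind of human adjustment Voronov mentions: the judgement sits in the rules and parameters, not outside the system.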

Does anything more need to be said to explain what a smart and intelligent system should be doing ?

And by the way, this example is from a book published 40 years ago !

When one starts to understand what such systems are really about, and how long they have been around, one can start going a little deeper rather than being preoccupied with such scientifically profound concerns as terminators enslaving humanity.

Consider the following observation by the remarkable Soviet mathematician Elena S. Wentzel -

"Even with totally automatic systems of control which seem to make decisions with no human interference, the judgement of a human is always present in the form of the algorithm employed by the system.

The functions of the human are not taken up by a machine, rather, they shift from a basic level to a more intelligent level. To add more weight to the argument, some automatic control systems are developed so that the human may actively interact to aid the process of control."

- Elena S. Wentzel, "Operations Research: A Methodological Approach"

And by the way, the above lines are from a book published 43 years ago !

As I have discussed earlier, the tremendous increase in the processing power of computers, the growth of GNU/Linux and the free and open-source software (FOSS) movement and the availability of open data allow us to develop such systems on our own laptops and desktops. All it requires is the courage and the willingness to learn things that are important for our work but are, as yet, unfamiliar to us.

It is always more liberating to learn and do than to pretend and defend.

In the forthcoming blogs we will continue to look at how such systems can be developed to tackle complex real world problems, using the resources that are readily available to us.

Thursday, July 13, 2023

Split City - have our urban missions caused multiple personality disorder in our cities ?

I had written in an earlier blog how the urban development process in India has overcome the "plan-was-good-but-implementation-was-poor" impasse by abandoning planning itself. And by planning I am referring not to the oft encountered terms such as "governance", "resilience", "sustainability" etc. etc. which end up meaning pretty much everything (and therefore - nothing), but the art and science of making sense of the future in order to better prepare for it.

As Brian G. Field and Bryan D. MacGregor wrote:

"Planning is a process of analysis and action which is necessarily about the future. It involves intervention to manipulate procedures or activities in order to achieve goals. Forecasting is crucial to such a process."

- 'Forecasting Techniques for Urban and Regional Planning'

Our political leaders and government officials love to mention in public speeches how the roads and pipes built in the country could well-nigh serve as measuring tapes for the solar system, and how the dwelling units built under our housing programs could house the population of certain developed countries six times over (provided, that is, they agree to stay in homes of 25 to 30 square meters. I have been involved in building a few of these...I have some idea).

But the mere reaching of astronomical numbers and sizes is not a measure of the functionality of the amenities created.

I wrote in an earlier blog about how new affordable housing units ended up being constructed under an earlier central government scheme right next to a slum that was being upgraded under a current state government scheme.

Presumably, the affordable housing scheme (intended to rehouse the residents of the slum) and the slum upgrading scheme (intended to keep the slum residents in their existing settlement) were not exactly on talking terms. The result was the creation of new housing units for households that no longer needed them.

The phenomenon is commonly described by concerned folk as the challenge of "working in silos". The solution prescribed is often a healthy dose of the magic medicine called "convergence".

But the problem is a bit more complex than remaining and operating in silos. It seems to be one of full blown Dissociative Identity Disorder (or split personality disorder).

Split City Syndrome

Working in silos would suggest that a particular unit dealing with a project or its part is not communicating adequately with other units dealing with related projects or other parts of the same project.

A multiple personality syndrome, however, is when the same unit acts like totally different entities when dealing with separate parts of the same project or with different but inter-related projects. For example, when working on the Swachh Bharat Mission (SBM), the same planning office may be oblivious of the fact that Pradhan Mantri Awas Yojana (PMAY) houses contain toilets, and then, while working on PMAY, it may forget that toilets for the targeted households may already have been constructed under SBM. This transition may happen seamlessly over the hours of the same working day.

This is not mere speculation. This is exactly what's going on in the field, in the cities and towns of India (comfortably far away from the large halls of Vigyan Bhawan where the National Urban Planning Conclave was recently held).

While attempting to saturate each and every slum settlement of a state with individual household level toilets, the alarm suddenly rings that the households that have applied for PMAY funding for beneficiary-led house construction (BLC) should be factored in.

That is certainly a wise thing to do, except that "factoring it in" effectively translates into counting the houses that have applied for PMAY BLC funding as "toilets that have already been constructed."

When thinking about toilets, the SBM personality dominates. Like a hammer seeing everything as a nail, that personality trait sees everything as a toilet. It eliminates all other rooms in a BLC house and sees only the toilet. It cares not if the house has been built or not. Then when the time comes to look at what is going on with the PMAY BLC house construction, all hell breaks loose, because it is realised that the construction has not even started as funds from the centre have not yet been released. When the PMAY personality trait dominates, then the SBM trait dissolves -- only the ghosts of toilets in yet-to-be-built houses populate the spreadsheets of SBM.
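One way to picture the cure for this split personality is a single household record that both missions read from, with the BLC status stored explicitly instead of being collapsed into "toilet built". The sketch below is hypothetical: the field names, the three status values and the four sample households are invented for illustration, and it assumes (as above) that a completed BLC house includes a toilet.

```python
# Hypothetical sketch: one shared household record read by both SBM and PMAY
# work, instead of each mission keeping its own version of the truth.
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class BLCStatus(Enum):
    APPLIED = "applied"
    UNDER_CONSTRUCTION = "under construction"
    COMPLETED = "completed"


@dataclass
class Household:
    household_id: str
    has_sbm_toilet: bool              # individual toilet already built under SBM
    blc_status: Optional[BLCStatus]   # None if the household has no BLC application


def toilets_counted_naively(households: List[Household]) -> int:
    # The "SBM personality": any BLC application is booked as a built toilet.
    return sum(1 for h in households if h.has_sbm_toilet or h.blc_status is not None)


def toilets_actually_available(households: List[Household]) -> int:
    # A toilet exists only if built under SBM or inside a completed BLC house.
    return sum(1 for h in households
               if h.has_sbm_toilet or h.blc_status is BLCStatus.COMPLETED)


def still_need_toilets(households: List[Household]) -> List[str]:
    return [h.household_id for h in households
            if not h.has_sbm_toilet and h.blc_status is not BLCStatus.COMPLETED]


if __name__ == "__main__":
    ward = [
        Household("H1", True, None),
        Household("H2", False, BLCStatus.APPLIED),   # funds not yet released
        Household("H3", False, BLCStatus.COMPLETED),
        Household("H4", False, None),
    ]
    print(toilets_counted_naively(ward))     # 3
    print(toilets_actually_available(ward))  # 2
    print(still_need_toilets(ward))          # ['H2', 'H4']
```

The "ghost toilet" in H2 disappears as soon as the count asks what has actually been built rather than what has been applied for.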

Convergence is desirable...but not at the top

The attempt to use the concept of "convergence" to link and coordinate the various mission verticals is a good idea. Overcoming the analysis-paralysis of long-drawn planning exercises was necessary. If planning lags behind reality by an ever-increasing margin, then such planning is useless. As Otto Koenigsberger remarked as early as the 1960s, after his experience of plan-making in Karachi, by the time the plan was prepared it was already out of date.

However, an implementation frenzy of the kind we are seeing in our cities, where the various mission verticals try to maximise their own outputs without paying any heed to how other missions are intertwined with it, is not desirable either.

Advances in technology and computing make it possible to have dynamic, real-time systems that can coordinate the functioning of inter-connected mission verticals. The so-called "convergence" of these missions is not supposed to happen at a higher level of decision-making, for example in an over-arching department of planning and convergence or in the office of a senior IAS officer who is in charge of multiple mission streams.

It is supposed to happen throughout the network of the mission streams and through the rank-and-file of the organisational system that is in-charge of the implementation.
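A common software pattern for coordination that is spread across a network rather than routed through one office is publish/subscribe: each unit announces what it has done, and every unit that cares reacts on its own. The sketch below is a hypothetical, in-process illustration of that pattern; the topic name `pmay.blc_completed` and the two field-unit classes are invented for this example and do not describe any existing mission system.

```python
# Hypothetical sketch of coordination spread across the network: each field
# unit publishes what it has done, and every unit that cares reacts locally,
# instead of routing every decision through one "convergence" office.
from collections import defaultdict
from typing import Callable, DefaultDict, Iterable, List, Set


class EventBus:
    """A minimal in-process publish/subscribe channel (illustration only)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[str], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, household_id: str) -> None:
        for handler in self._subscribers[topic]:
            handler(household_id)


class SBMFieldUnit:
    def __init__(self, bus: EventBus, households_without_toilets: Iterable[str]) -> None:
        self.to_cover: Set[str] = set(households_without_toilets)
        bus.subscribe("pmay.blc_completed", self.on_blc_completed)

    def on_blc_completed(self, household_id: str) -> None:
        # A completed BLC house comes with a toilet, so SBM drops it from its target.
        self.to_cover.discard(household_id)


class PMAYFieldUnit:
    def __init__(self, bus: EventBus) -> None:
        self.bus = bus
        self.completed: Set[str] = set()

    def mark_completed(self, household_id: str) -> None:
        # Only completion is announced; a mere application changes nothing for SBM.
        self.completed.add(household_id)
        self.bus.publish("pmay.blc_completed", household_id)


if __name__ == "__main__":
    bus = EventBus()
    sbm = SBMFieldUnit(bus, ["H2", "H4"])
    pmay = PMAYFieldUnit(bus)
    pmay.mark_completed("H2")
    print(sorted(sbm.to_cover))  # ['H4']
```

The design choice worth noticing is that no unit needs a supervisor to tell it what another unit did; the update travels to wherever it is relevant at the moment it happens.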

More on that in forthcoming blogs...

Tuesday, July 11, 2023

Open Data...or Open Disdain ? Cognitive dissonance and Urban India's Open (GIGO) Portals

Have you even visited your own "Open Data" portals ?

In Satyajit Ray's classic film "Jana Aranya", the character played by Utpal Dutt asks a banana seller if he had ever himself tasted the bananas that he sells.

Better hear the voice of the great Utpal Dutt yourself, and keep the tone in your mind -

We can then ask the officials and consultants of India's various urban missions in the same tone, if they have ever visited their own "open data" portals.

A few months ago, at a conference on the Smart Cities Mission, a senior official of the mission said that he was very glad that so much data was now freely available to the public through open data platforms such as the Open Government Data (OGD) platform, the India Urban Data Exchange (IUDX) platform etc.

One wonders how he could say something like that at a public forum, and how none of the die-hard supporters and critics of the mission in the audience had any questions about such a statement.

Open data and my neighbour's laundry list

I have made multiple visits to both the above platforms and downloaded various data files. Never have I ever found anything with any more usefulness or relevance to my work than ... let's say....my neighbour's laundry list. It seems as if the professionals tasked with uploading data to these portals found every scrap of excel spreadsheet lying around in their respective offices and dumped them in these digital bins. Maybe they are rewarded for the sheer number of files they upload rather than for what those files contain.

It is also amusing to discover that individuals who talk passionately about these platforms have never visited them or downloaded any data from them. A large part of the problem is also an unfamiliarity with the basic standards of data storage and a lack of clarity regarding the tasks that the data should be used for.

The fact that this useless data is available in a range of file types such as csv, json, ods etc further compounds the irony of the situation.

And of course, let's not forget that entering the website and accessing the data are not always the same thing.

Often you will encounter this at some point -

Or this -

Strangely, when I had checked the portal some time back, many of the "private" buttons were "open" and coloured a welcoming green. Of course, in the name of smart traffic signal data they often contained something as amazing as a column of names of certain squares (all the rest is left to the Sherlock Holmesian powers of imagination and deduction of the website visitor).

Consider the following csv (comma separated value) file available on the OGD portal -

This is all the information that this downloaded csv file contains. The file contains no metadata (which means there is no data on the data itself) such as - when was it uploaded, who uploaded it, which period is represented in the data, what do the fields mean (does "Nos. of IHHL" mean number of individual household toilets under construction or already constructed or targeted ?), does the data correspond to the Swachh Bharat Mission (SBM) or some other project...and, how on earth does a person not dealing daily with Indian development lingo know what "IHHL" stands for in the first place ??...etc. etc. etc.
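Checking for missing metadata is not hard to automate before a file is ever uploaded. The sketch below is a hypothetical minimum: the list of required keys is an assumption made for illustration (not a standard used by any Indian portal), and the sample file, including the "Ward" column and its values, is a made-up placeholder. It simply lists everything a reader would need to know but is not told.

```python
# Hypothetical sketch of a minimal metadata check before a CSV is published.
# The required keys are illustrative assumptions, not a portal standard.
import csv
import io

REQUIRED_METADATA = [
    "title", "publisher", "date_uploaded",
    "period_covered", "source_scheme", "field_descriptions",
]


def metadata_problems(csv_text: str, metadata: dict) -> list:
    """List what a reader would need to know but is not told."""
    problems = [f"missing metadata: {key}" for key in REQUIRED_METADATA
                if not metadata.get(key)]
    header = next(csv.reader(io.StringIO(csv_text)))
    described = metadata.get("field_descriptions") or {}
    problems += [f"undescribed column: {col}" for col in header
                 if col not in described]
    return problems


if __name__ == "__main__":
    # Placeholder file: the column "Ward" and the values are made up.
    csv_text = "Ward,Nos. of IHHL\n1,120\n2,85\n"
    for problem in metadata_problems(csv_text, metadata={}):
        print(problem)
```

A file arriving with no metadata at all fails on every count, which is exactly the state of the file described above.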

The incompleteness of the data further renders it useless. Even if we were to assume that the data shows how many toilets have been constructed, what is the use of that if not compared against the total toilets that were supposed to be constructed ? Even if that data were available in another file on the portal, they would not be comparable due to the lack of metadata.
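The comparison problem can be shown the same way: an achievement figure only means something against a target, and two files can only be joined if their metadata says they describe the same scheme, indicator and period. In this hypothetical sketch the ward keys, the comparability keys and the sample numbers are all invented for illustration.

```python
# Hypothetical sketch: achievement is only meaningful against a target, and the
# two files can only be joined if their metadata says they describe the same thing.
from typing import Dict

COMPARABILITY_KEYS = ("source_scheme", "indicator", "period_covered")


def progress_against_target(achieved: Dict[str, int], target: Dict[str, int],
                            achieved_meta: dict, target_meta: dict) -> Dict[str, float]:
    # Refuse to compare files whose metadata is missing or inconsistent.
    for key in COMPARABILITY_KEYS:
        value = achieved_meta.get(key)
        if value is None or value != target_meta.get(key):
            raise ValueError(f"files not comparable: '{key}' is missing or differs")
    # Share of target achieved per ward; wards with no target are skipped.
    return {ward: achieved.get(ward, 0) / goal
            for ward, goal in target.items() if goal > 0}


if __name__ == "__main__":
    meta = {"source_scheme": "SBM", "indicator": "IHHL constructed",
            "period_covered": "placeholder period"}
    print(progress_against_target({"W1": 50, "W2": 30}, {"W1": 100, "W2": 40}, meta, meta))
```

Without the metadata the function refuses to answer, which is the honest response to garbage in.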

The hard fact regarding any data management process is that any data that does not contain meta-data is garbage data. And considering the inevitable thing that happens when garbage data is fed into any analytical or decision-making system (Garbage In - Garbage Out....aka GIGO)....none of this data should be used by anyone actually trying to do something useful.

Genuine open data portals

It is normal for the "defenders" of these portals to wax apologetic when confronted with these issues with predictable statements such as - "Yes...but it also contains things which are useful...with time it will improve...it takes time to build something like this" etc etc.

There is no need for all that. Just a quick visit to any of the following would give one a clear idea on what serious open data portals should be like.

Bhuvan portal of the Indian Space Research Organisation (ISRO) and the National Remote Sensing Centre (NRSC)

Automatic Weather Station portal of the India Meteorological Department.

USGS EarthExplorer of the United States Geological Survey.

AND not to forget the wonderful....

At the end it all boils down to this - if you have something serious to do and you know what you are talking about, your data won't have to be a pile of BS...open or not.
