Tackling the dynamic with the static

One of the primary challenges facing the management and governance of our cities is the fact that they are extremely complex and dynamic systems. At any point in time, the decision-maker has to juggle multiple unknown and possibly unknowable variables. Dealing with our cities, therefore, must be seen as a fascinating challenge of dealing with uncertainty.

But how does one achieve this? How does one master the uncertain and the unknown? There are many ways of doing so, which unfortunately remain unused by our city planners and decision-makers. Our planners, obsessed with preparing voluminous master plans, often ignore something very fundamental: you cannot tackle something extremely dynamic with something extremely static.

As the renowned architect-planner Otto Koenigsberger observed six decades ago while preparing the master plan of Karachi, by the time such master plans are ready they are already out of date. In such a situation, not only do the planners' decisions hit the ground late, but the feedback is hopelessly delayed too.

The importance of feedback...and of cognitive dissonance

Anyone dealing with complex systems would be familiar with the crucial value of feedback. A system that continuously fails to correct itself in time is a doomed system.

Yet are handling vast amounts of data, taking decisions in real time, and correcting course continuously based on feedback from a network of sensors really such insurmountable problems today?

On the contrary, a characteristic feature of the present times is not only a complete technological mastery over these challenges but also the increasing affordability and accessibility of such technology. Does Google Maps wait for a monthly data analysis report to figure out how many people had difficulty reaching a selected destination and modify its algorithm accordingly? No. It shows an alternate route instantly when it senses that the user has taken a wrong turn.

We take this feature of Google Maps as much for granted as we take a 20-year perspective plan of a city for granted. Such is the collective cognitive dissonance of our times.

Tackling uncertainty by shortening the data collection cycle

Let us consider a problem more serious than successfully reaching the shopping mall using Google navigation. We are all aware of the havoc that flash floods cause in our cities. They are hard to anticipate because they can occur within minutes due to extremely high rainfall intensities. As a consequence of climate change, we can only expect such events to turn more erratic and intense over time. While the rain falls for a short duration, the available rain gauges still measure the average rainfall over a 24-hour cycle. Therefore, despite collecting vast amounts of rainfall data, we may still not be able to use it for predicting the occurrence of flash floods.

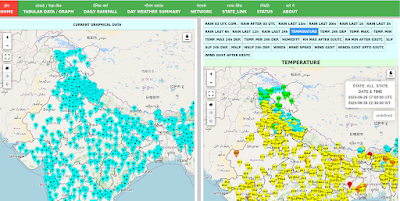

However, in the last few years, the Indian Space Research Organisation (ISRO) and the India Meteorological Department (IMD) have installed Automatic Weather Stations (AWS) at various locations across the country which record rainfall data at intervals of less than an hour. With data from the AWS, we not only get better data sets for analysis but can also respond in near real time when an event happens.

In this case, we tackled the uncertainty of the rainfall event by reducing the data-collection cycle from 24 hours to less than an hour. We didn't eliminate uncertainty but we definitely limited it.
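To make the effect of the shorter cycle concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the 15-minute readings are invented, the 50 mm alert threshold is hypothetical, and the function names are not taken from any AWS or IMD system. It only shows how the same day of rain looks harmless as a 24-hour average and alarming when read at sub-hourly intervals.

```python
# Hypothetical 15-minute rainfall readings (mm) for one day: 96 intervals.
# A 45-minute cloudburst (14:00 to 14:45) sits inside an otherwise dry day.
readings_mm = [0.0] * 96
readings_mm[56:59] = [30.0, 40.0, 20.0]


def daily_average_intensity(readings, interval_minutes=15):
    """Average intensity in mm per hour over the whole record."""
    hours = len(readings) * interval_minutes / 60
    return sum(readings) / hours


def peak_hourly_rainfall(readings, interval_minutes=15):
    """Largest rainfall total (mm) found in any sliding one-hour window."""
    per_hour = 60 // interval_minutes
    return max(
        sum(readings[i:i + per_hour])
        for i in range(len(readings) - per_hour + 1)
    )


def first_alert_index(readings, threshold_mm, interval_minutes=15):
    """Index of the first interval whose trailing one-hour total reaches
    the threshold, or None if it is never reached."""
    per_hour = 60 // interval_minutes
    for i in range(len(readings)):
        window = readings[max(0, i - per_hour + 1):i + 1]
        if sum(window) >= threshold_mm:
            return i
    return None


print(daily_average_intensity(readings_mm))   # what a 24-hour gauge suggests
print(peak_hourly_rainfall(readings_mm))      # what the short cycle reveals
print(first_alert_index(readings_mm, 50.0))   # hypothetical 50 mm/hour alert
```

With these invented numbers, the daily view reports a mild average while the hourly view exposes the cloudburst, and the alert fires while the event is still in progress rather than the next morning.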

While tackling complex and uncertain situations, our main allies are not needlessly vast quantities of data but the ability to articulate the problem clearly and then to algorithmise it. The more articulate the problem statement, the more effective the algorithm.

Algorithmising Jaga Mission tasks

Let's take another example: Jaga Mission, the Government of Odisha's flagship slum-empowerment program. Jaga Mission has arguably created the most comprehensive geo-spatial database of slums in the world. Its database consists of ultra-high resolution (2 cm) drone imagery of each and every slum in the state.

But the vastness of the data by itself achieves nothing, except adding to the headaches of custodians who lack the necessary data-handling skills.

It is only when the variables contained in the data are identified and linked with each other that one can feel its true power.

The database essentially consists of four main components for each of the 2,919 slums of the state:

(c) The slum houses map layer (containing household information)

(d) The cadastral map layer (showing land-parcels and ownership)

These four components can be combined in myriad ways to tackle a whole range of complex problems encountered during implementation.

Does a city wish to know whether some slums lie on land belonging to the forest department, in order to avoid problems during upgrading?

No problem! Just filter the forest parcels in the cadastral layer (d) and find out exactly which households are affected by creating an intersection with the slum houses layer (c). Similarly, the total area lying on forest land can be found by creating an intersection with the slum boundary layer (b).
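The forest-land query can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not Jaga Mission's actual workflow: the coordinates, the house identifiers and the function names are hypothetical, the forest parcel is assumed to be convex, and all shapes are assumed to be in planar coordinates (metres) with vertices listed counter-clockwise. The intersection uses the Sutherland-Hodgman clipping algorithm; in practice a GIS package would perform this step.

```python
def polygon_area(poly):
    """Shoelace formula; returns the absolute area (0.0 for an empty list)."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def clip_to_convex(subject, clip):
    """Sutherland-Hodgman: the part of `subject` lying inside the convex,
    counter-clockwise polygon `clip`."""
    def inside(p, a, b):
        # True when p is on or to the left of the directed edge a -> b.
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def crossing(p, q, a, b):
        # Point where segment p -> q meets the line through a and b.
        dx1, dy1 = q[0] - p[0], q[1] - p[1]
        dx2, dy2 = b[0] - a[0], b[1] - a[1]
        t = ((a[0] - p[0]) * dy2 - (a[1] - p[1]) * dx2) / (dx1 * dy2 - dy1 * dx2)
        return (p[0] + t * dx1, p[1] + t * dy1)

    output = list(subject)
    for a, b in zip(clip, clip[1:] + clip[:1]):
        if not output:
            break
        current, output = output, []
        for p, q in zip(current, current[1:] + current[:1]):
            if inside(q, a, b):
                if not inside(p, a, b):
                    output.append(crossing(p, q, a, b))
                output.append(q)
            elif inside(p, a, b):
                output.append(crossing(p, q, a, b))
    return output


# Hypothetical data standing in for layers (b), (c) and (d).
forest_parcel = [(0, 0), (10, 0), (10, 10), (0, 10)]   # filtered from (d)
slum_boundary = [(6, 2), (16, 2), (16, 8), (6, 8)]     # from (b)
houses = {                                              # from (c)
    "H1": [(7, 3), (9, 3), (9, 5), (7, 5)],
    "H2": [(9, 6), (11, 6), (11, 8), (9, 8)],
    "H3": [(12, 3), (14, 3), (14, 5), (12, 5)],
}

# Households whose footprint overlaps the forest parcel.
affected = [
    house_id for house_id, footprint in houses.items()
    if polygon_area(clip_to_convex(footprint, forest_parcel)) > 0
]
# Slum area lying on forest land.
forest_overlap = polygon_area(clip_to_convex(slum_boundary, forest_parcel))

print(affected)
print(forest_overlap)
```

The point of the sketch is the shape of the question rather than the geometry code: once the problem is stated as "filter one layer, intersect it with another", the answer is a short, repeatable computation that can be rerun for every slum in the database.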